I’m always having to look up RAID levels, so I threw this together to keep them all in one place. It’s from various places around the web, mostly Wikipedia. It’s been a while since I put it together; I was cleaning up HTML files and thought I’d post it.

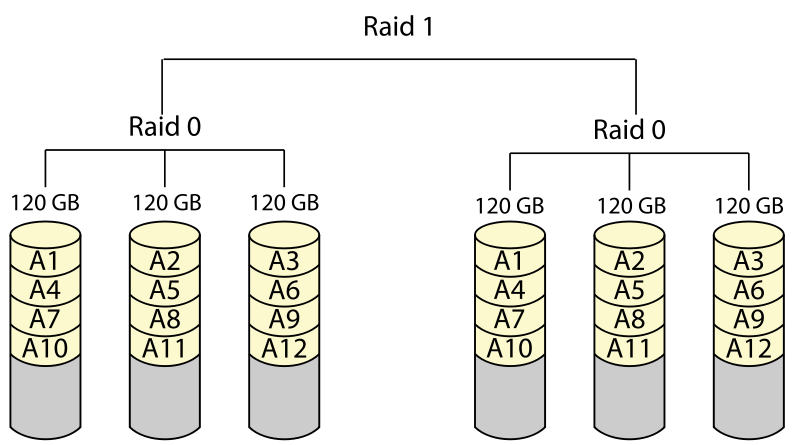

RAID 0+1

RAID 0+1 (also called RAID 01) is a RAID level used for both replicating and sharing data among disks. The minimum number of disks required to implement this level of RAID is 3 (first, numbered chunks are built on all disks, as in RAID 0, and then every odd-numbered chunk is mirrored with its next higher even neighbor), but it is more common to use a minimum of 4 disks. The difference between RAID 0+1 and RAID 1+0 is the order in which the two levels are applied: RAID 0+1 is a mirror of stripes. The usable capacity of a RAID 0+1 array is (N/2) * Smin, where N is the total number of drives in the array (must be even) and Smin is the capacity of the smallest drive in the array.
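The capacity formula above is easy to check with a few lines of Python. This is only a sketch: the function name and the example drive sizes are made up for illustration, and it assumes the common case of an even number of drives, at least four.

```python
def raid01_usable_capacity(drive_sizes_gb):
    """Usable capacity of a RAID 0+1 array: (N / 2) * Smin."""
    n = len(drive_sizes_gb)
    # Assumption for this sketch: an even drive count of at least 4.
    if n < 4 or n % 2 != 0:
        raise ValueError("this sketch expects an even number of drives, at least 4")
    # Every drive is treated as if it were only as large as the smallest one.
    return (n // 2) * min(drive_sizes_gb)


# Hypothetical array: three 500 GB drives and one 400 GB drive.
print(raid01_usable_capacity([500, 500, 500, 400]))  # (4 / 2) * 400 = 800
```

Note how the single 400 GB drive drags the whole array down: 100 GB on each of the other three drives goes unused.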

RAID 0+1

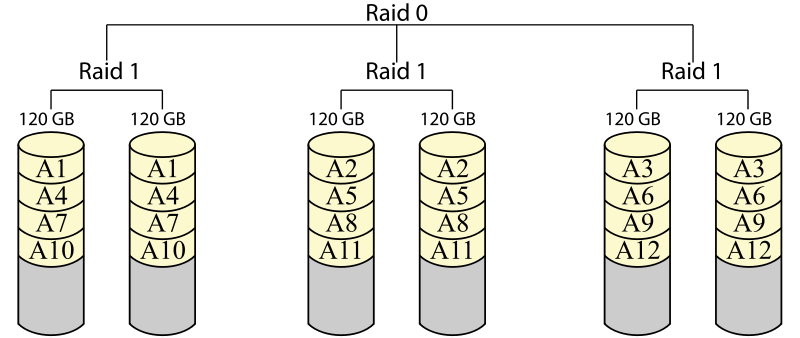

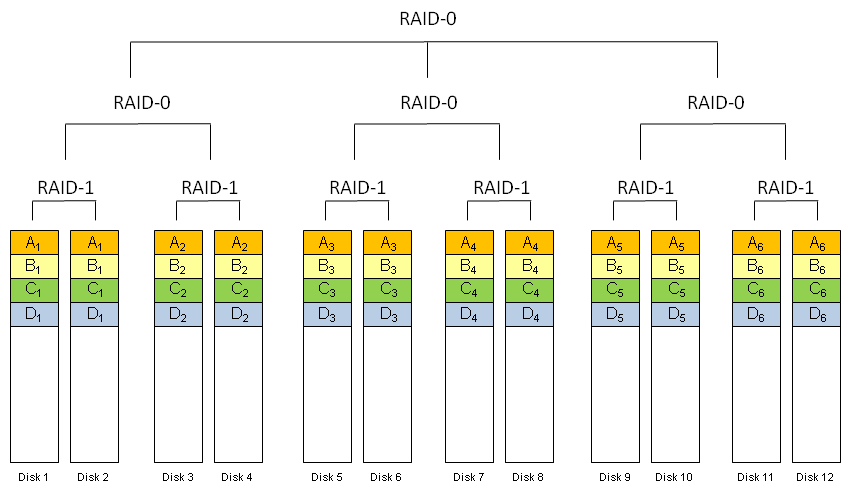

RAID 10

RAID 1+0, sometimes called RAID 1&0 or RAID 10, is similar to RAID 0+1 except that the RAID levels are applied in the reverse order: RAID 10 is a stripe of mirrors. RAID 10 as recognized by the storage industry association and as generally implemented by RAID controllers is a RAID 0 array of mirrors (which may be two-way or three-way mirrors) and requires a minimum of 4 drives.

Linux “RAID 10” can be implemented with as few as two disks. Implementations supporting two disks, such as Linux RAID10, offer a choice of layouts. In one, copies of a block of data are “near” each other, at the same address on different devices or at a predictable offset. Each disk access is split into full-speed disk accesses to different drives, yielding read and write performance like RAID0 but without necessarily guaranteeing every stripe is on both drives. Another layout uses “a more RAID0 like arrangement over the first half of all drives, and then a second copy in a similar layout over the second half of all drives – making sure that all copies of a block are on different drives.” This has high read performance because only one of the two read locations must be found on each access, but writing requires more head seeking as two write locations must be found. Very predictable offsets minimize the seeking in either configuration.

“Far” configurations may be exceptionally useful for hybrid SSDs with huge caches of 4 GB (compared to the more typical 64 MB on spinning-platter drives in 2010) and, by 2011, 64 GB (as this level of storage exists now on one single chip). They may also be useful for those small pure-SSD bootable RAIDs which are not reliably attached to network backup and so must maintain data for hours or days, but which are quite sensitive to the cost, power and complexity of more than two disks. Write access for SSDs is extremely fast, so the multiple accesses become less of a problem for speed: at PCIe x4 SSD speeds, the theoretical maximum of 730 MB/s is already more than double the theoretical maximum of SATA-II at 300 MB/s.
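To make “stripe of mirrors” concrete, here is a minimal Python sketch of where a logical block lands in a conventional four-drive RAID 10. The function name and drive numbering are my own, and this is the simple controller-style arrangement with two-way mirrors, not the Linux near/far layouts described above.

```python
def raid10_targets(block, mirror_pairs):
    """Return the two drives holding a logical block in a stripe of two-way mirrors.

    Drive numbering is an assumption for this sketch: pair p is drives 2p and 2p + 1.
    """
    pair = block % mirror_pairs      # RAID 0 layer: round-robin across the mirror pairs
    return (2 * pair, 2 * pair + 1)  # RAID 1 layer: both drives of the pair get a copy


for block in range(4):
    print(block, raid10_targets(block, mirror_pairs=2))
# 0 (0, 1)
# 1 (2, 3)
# 2 (0, 1)
# 3 (2, 3)
```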

RAID10

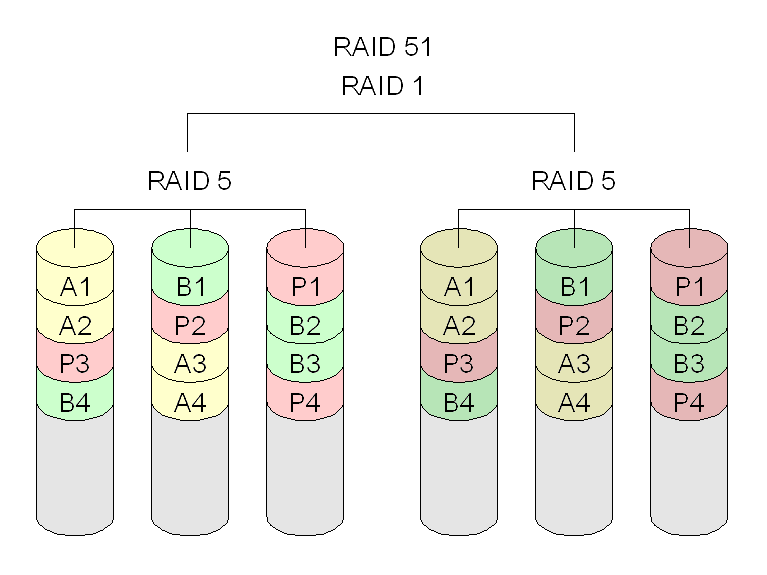

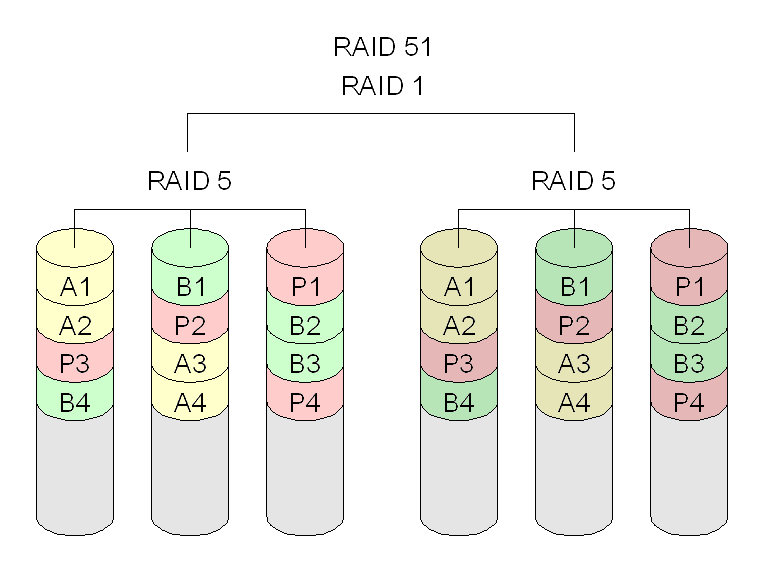

RAID Levels 1+5 (15) and 5+1 (51)

RAID 1+5 and 5+1 might be sarcastically called “the RAID levels for the truly paranoid”. :^) These are the only configurations that use both redundancy methods, mirroring and parity; this “belt and suspenders” technique is designed to maximize fault tolerance and availability, at the expense of just about everything else. A RAID 15 array is formed by creating a striped set with parity using multiple mirrored pairs as components; it is similar in concept to RAID 10 except that the striping is done with parity. Similarly, RAID 51 is created by mirroring entire RAID 5 arrays and is similar to RAID 01 except again that the sets are RAID 5 instead of RAID 0 and hence include parity protection. Performance for these arrays is good but not very high for the cost involved, nor relative to that of other multiple RAID levels. The fault tolerance of these RAID levels is truly amazing: an eight-drive RAID 15 array can tolerate the failure of any three drives simultaneously; an eight-drive RAID 51 array can also handle any three, and even as many as five, as long as at least one of the mirrored RAID 5 sets has no more than one failure! The price paid for this resiliency is complexity and cost of implementation, and very low storage efficiency. The RAID 1 component of this nested level may in fact use duplexing instead of mirroring to add even more fault tolerance.
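The eight-drive fault-tolerance claims above can be checked by brute force. The sketch below is my own model, not anything from a real controller: drives are numbered 0 to 7, RAID 15 is four mirrored pairs under RAID 5, and RAID 51 is two four-drive RAID 5 sets mirrored against each other.

```python
from itertools import combinations

DRIVES = range(8)


def raid15_survives(failed):
    # Four mirrored pairs (0,1), (2,3), (4,5), (6,7) joined by RAID 5.
    # A pair is lost only when both of its drives fail; RAID 5 tolerates one lost pair.
    lost_pairs = sum(1 for p in range(4) if {2 * p, 2 * p + 1} <= failed)
    return lost_pairs <= 1


def raid51_survives(failed):
    # Two four-drive RAID 5 sets (drives 0-3 and 4-7) mirrored against each other.
    # Data survives while at least one set has no more than one failed drive.
    in_set_a = len(failed & {0, 1, 2, 3})
    in_set_b = len(failed & {4, 5, 6, 7})
    return in_set_a <= 1 or in_set_b <= 1


def guaranteed_tolerance(survives):
    """Largest k such that every combination of k failed drives is survivable."""
    k = 0
    while k < 8 and all(survives(set(c)) for c in combinations(DRIVES, k + 1)):
        k += 1
    return k


def best_case_tolerance(survives):
    """Largest k such that at least one combination of k failed drives is survivable."""
    return max(k for k in range(9)
               if any(survives(set(c)) for c in combinations(DRIVES, k)))


print(guaranteed_tolerance(raid15_survives), best_case_tolerance(raid15_survives))  # 3 5
print(guaranteed_tolerance(raid51_survives), best_case_tolerance(raid51_survives))  # 3 5
```

Under this model both layouts survive any three failures and, with luck, up to five. The difference is only in which combinations of five are the lucky ones.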

RAID 51

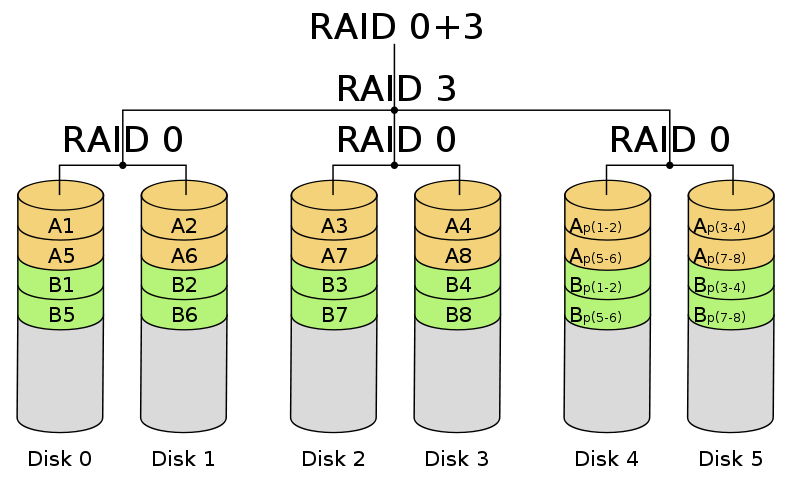

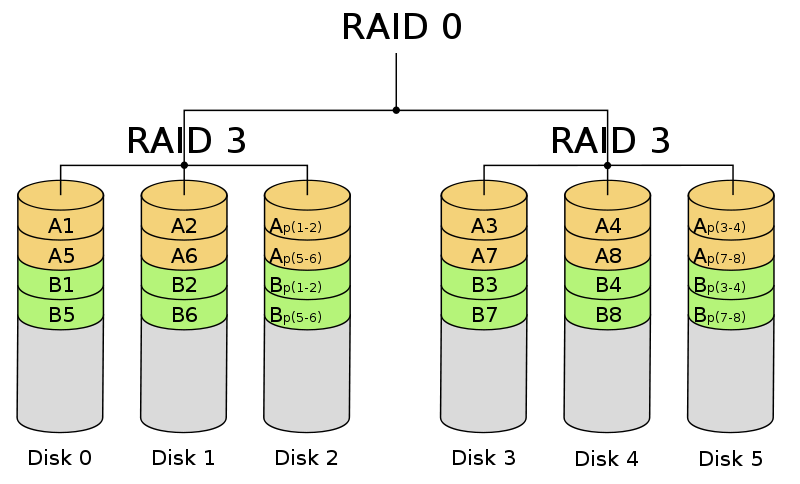

RAID 0+3

RAID level 0+3 or RAID level 03 is a dedicated parity array across striped disks. Each block of data at the RAID 3 level is broken up amongst RAID 0 arrays where the smaller pieces are striped across disks.

RAID 0+3

RAID 30

RAID level 30 is also known as striping of dedicated parity arrays. It is a combination of RAID level 3 and RAID level 0. RAID 30 provides high data transfer rates, combined with high data reliability. RAID 30 is best implemented on two RAID 3 disk arrays with data striped across both disk arrays. RAID 30 breaks up data into smaller blocks, and then stripes the blocks of data to each RAID 3 set. RAID 3 breaks up data into smaller blocks, calculates parity by performing an Exclusive OR on the blocks, and then writes the blocks to all but one drive in the array. The parity created using the Exclusive OR is then written to the last drive in each RAID 3 array. The size of each block is determined by the stripe size parameter, which is set when the RAID is created.

One drive from each of the underlying RAID 3 sets can fail. Until the failed drives are replaced, the other drives in the sets that suffered such a failure are a single point of failure for the entire RAID 30 array. In other words, if one of those drives fails, all data stored in the entire array is lost. The time spent in recovery (detecting and responding to a drive failure, and the rebuild process to the newly inserted drive) represents a period of vulnerability to the RAID set.
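The Exclusive OR parity described above is simple enough to demonstrate in a few lines. This is just a sketch with made-up two-byte blocks and a hypothetical helper name; it shows how the parity is computed and how a lost block is rebuilt from the survivors.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Made-up blocks sitting on the three data drives of one RAID 3 set.
data = [b"\x0f\xf0", b"\x33\x33", b"\x55\xaa"]
parity = xor_blocks(data)  # written to the dedicated parity drive

# Simulate losing data drive 1 and rebuilding it from the other drives plus parity.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt == data[1])  # True
```

The rebuild works because XOR-ing a value in twice cancels it out, which is also why a second failure in the same set is fatal: there is no longer enough information to cancel down to a single missing block.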

RAID 30

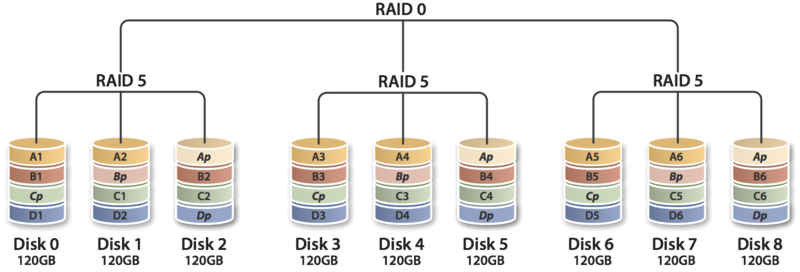

RAID 50

A RAID 50 combines the straight block-level striping of RAID 0 with the distributed parity of RAID 5.[1] This is a RAID 0 array striped across RAID 5 elements. It requires at least 6 drives. Below is an example where three collections of 240 GB RAID 5s are striped together to make 720 GB of total storage space:

One drive from each of the RAID 5 sets could fail without loss of data. However, if the failed drive is not replaced, the remaining drives in that set then become a single point of failure for the entire array. If one of those drives fails, all data stored in the entire array is lost. The time spent in recovery (detecting and responding to a drive failure, and the rebuild process to the newly inserted drive) represents a period of vulnerability to the RAID set.

In the example below, datasets may be striped across both RAID sets. A dataset with 5 blocks would have 3 blocks written to the first RAID set, and the next 2 blocks written to RAID set 2. RAID 50 improves upon the performance of RAID 5, particularly during writes, and provides better fault tolerance than a single RAID level does. This level is recommended for applications that require high fault tolerance, capacity and random positioning performance. As the number of drives in a RAID set increases, and as the capacity of the drives increases, the fault-recovery time grows correspondingly because rebuilding the RAID set takes longer.
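The 720 GB figure in the example above can be reproduced with a small calculation. The drive size and count per set are my assumption (three 120 GB drives per RAID 5 set, which gives the 240 GB per set mentioned in the text), and the function name is made up.

```python
def raid50_capacity_gb(sets, drives_per_set, drive_gb):
    """Usable capacity of a RAID 50: RAID 0 across several identical RAID 5 sets."""
    if sets < 2 or drives_per_set < 3:
        raise ValueError("RAID 50 needs at least two RAID 5 sets of three drives each")
    # Each RAID 5 set gives up one drive's worth of space to parity.
    per_set = (drives_per_set - 1) * drive_gb
    # The RAID 0 layer simply adds the sets together.
    return sets * per_set


# Assumed layout: three RAID 5 sets, each made of three 120 GB drives.
print(raid50_capacity_gb(sets=3, drives_per_set=3, drive_gb=120))  # 3 * 240 = 720
```

The six-drive minimum from the text is the smallest case this accepts: two sets of three drives.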

RAID 50

RAID 51

A RAID51 or RAID5+1 is an array that consists of two RAID 5’s that are mirrors of each other. Generally this configuration is used so that each RAID5 resides on a separate controller. In this configuration reads and writes are balanced across both RAID5s. Some controllers support RAID51 across multiple channels and cards with hinting to keep the different slices synchronized. However, a RAID51 can also be accomplished using a layered RAID technique. In this configuration, the two RAID5’s have no idea that they are mirrors of each other and the RAID1 has no idea that its underlying disks are RAID5’s. This configuration can sustain the failure of all disks in either of the arrays, plus up to one additional disk from the other array before suffering data loss. The maximum usable space of a RAID51 is N, where N is the usable size of an individual RAID5 set.

RAID 51

RAID 05 (RAID 0+5)

A RAID 0 + 5 consists of several RAID0’s (a minimum of three) that are grouped into a single RAID5 set. The total capacity is (N-1) times the capacity of one RAID0, where N is the total number of RAID0’s that make up the RAID5. This configuration is not generally used in production systems.

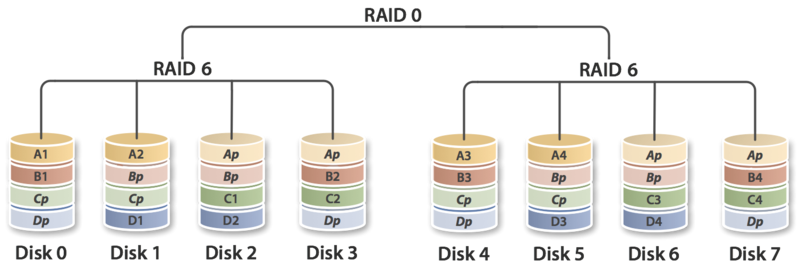

RAID 60 (RAID 6+0)

A RAID 60 combines the straight block-level striping of RAID 0 with the distributed double parity of RAID 6. That is, a RAID 0 array striped across RAID 6 elements. It requires at least 8 disks.[2] Below is an example where two collections of 240 GB RAID 6s are striped together to make 480 GB of total storage space:

As it is based on RAID 6, two disks from each of the RAID 6 sets could fail without loss of data. Failures while a single disk is rebuilding in one RAID 6 set will also not lead to data loss. RAID 60 has improved fault tolerance: any two drives can fail without data loss, and up to four in total as long as no more than two come from each RAID6 sub-array. Striping helps to increase capacity and performance without adding disks to each RAID 6 set (which would decrease data availability and could impact performance). RAID 60 improves upon the performance of RAID 6. RAID 60 is slightly slower than RAID 50 for writes because of the added overhead of more parity calculations, but where data security is the concern this performance drop may be negligible.
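The “two per set, four in total” rule above boils down to a one-line check. Here is a sketch of it in Python; the function name and the way failures are counted per set are my own.

```python
def raid60_survives(failures_per_set):
    """True if no RAID 6 set in the stripe has lost more than two drives."""
    return all(failed <= 2 for failed in failures_per_set)


# Two RAID 6 sets; each entry is the number of failed drives in that set.
print(raid60_survives([2, 0]))  # True: any two failures are always fine
print(raid60_survives([2, 2]))  # True: four failures, two in each set
print(raid60_survives([3, 0]))  # False: three in one set takes down the stripe
```

Because the top layer is RAID 0, losing any single RAID 6 set loses the whole array, which is why the check uses `all` rather than counting total failures.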

RAID60

RAID 100

A RAID 100, sometimes also called RAID 10+0, is a stripe of RAID 10s. This is logically equivalent to a wider RAID 10 array, but is generally implemented using software RAID 0 over hardware RAID 10. Being “striped two ways”, RAID 100 is described as a “plaid RAID”. The major benefits of RAID 100 (and plaid RAID in general) over single-level RAID are spreading the load across multiple RAID controllers, giving better random read performance and mitigating hotspot risk on the array. For these reasons, RAID 100 is often the best choice for very large databases, where the hardware RAID controllers limit the number of physical disks allowed in each standard array. Implementing nested RAID levels allows virtually limitless spindle counts in a single logical volume.

This triple-level Nested RAID configuration seems to be a good place to start our examination of triple Nested RAID configurations. It takes the popular RAID-10 configuration and adds on another RAID-0 layer. Remember that we want to put the performance RAID level ‘last’ in the Nested RAID configuration (at the highest RAID level). The primary reason is that it helps reduce the number of drives involved in a rebuild in the event of the loss of a drive. RAID-100 takes several (at least two) RAID-10 configurations and combines them with RAID-0.

RAID 100

This is just a sample layout illustrating a possible RAID-100 configuration. Remember that the Nested RAID layout goes from the lowest level (furthest left number in the RAID numbering), to the highest level (furthest right in the RAID numbering). So RAID-100 starts with RAID-1 at the lowest level (closest to the drives) and then combines the RAID-1 pairs with RAID-0 in the intermediate layer resulting in several RAID-0 groups (minimum of two). Then the intermediate RAID-0 groups are combined into a final RAID-0 group (a single RAID-0 group).

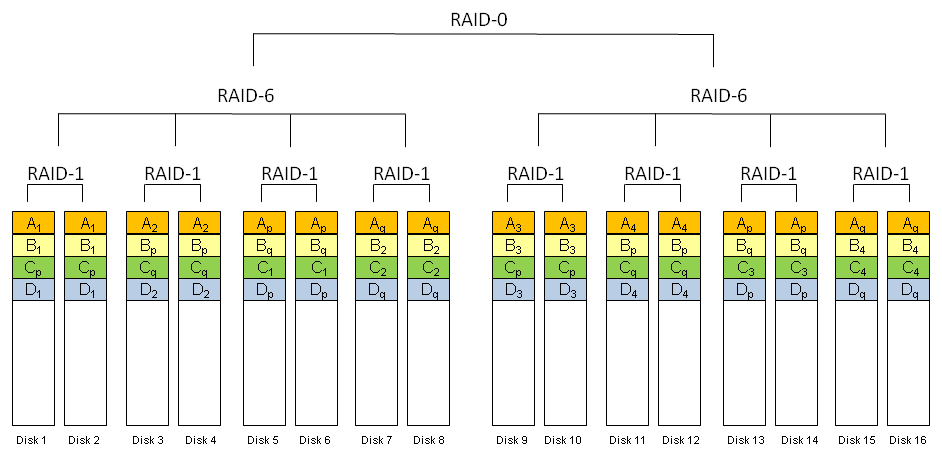

RAID-160

With nested RAID-5 and RAID-6, you could lose up to five drives in some configurations without losing access to data. That is an amazing amount of data protection! Moreover, you have great read performance with RAID-16, but the write performance and the storage efficiency can be quite low. As an example of a three-level Nested RAID configuration that balances performance and redundancy, I created a three-level RAID configuration, RAID-160, that attempts to build on the great data redundancy of RAID-16 and add back some performance and storage efficiency. RAID-160 starts with RAID-1 pairs at the lowest level. Then the intermediate layer (RAID-6) takes four of these pairs per intermediate RAID-6 group (at least two intermediate RAID-6 groups are needed). The top RAID layer combines the intermediate RAID-6 layers with RAID-0 to gain back some write performance and hopefully some storage efficiency. Figure 2 is the smallest RAID-160 configuration, which uses sixteen drives.

RAID160

This is just a sample layout illustrating how a RAID-160 configuration is laid out. Remember that the layout goes from the lowest level (furthest left number in the RAID numbering), to the highest level (furthest right in the RAID numbering). So RAID-160 starts with RAID-1 at the lowest level (closest to the drives) that has pairs of drives in RAID-1 (I’m assuming that RAID-1 happens with two drives). Then the RAID-1 pairs are combined using RAID-6 in the intermediate layer to create RAID-6 groups (at least two are needed). Since RAID-6 requires at least four “drives”, you need at least four RAID-1 pairs to create an intermediate RAID-6 group. Finally the RAID-6 groups are combined at the highest level using RAID-0 (a single RAID-0 group).

As with RAID-100, this configuration can make sense when you use multiple RAID cards that are capable of RAID-16. In the case of Figure 2, you use two RAID cards capable of RAID-16 and then combine them at the top level with software RAID-0 (i.e. RAID that runs in the Linux kernel). This makes sense for RAID-160 because RAID-6 requires a great deal of computational power, and splitting drives into multiple RAID-6 groups, each with its own RAID processor, helps improve overall RAID performance.

The fault tolerance of RAID-160 is based on that of RAID-16 and is five drives. You can lose two RAID-1 pairs within one RAID-6 group and still retain access to the data. You can then lose a fifth drive that is part of a third RAID-1 pair in the same RAID-6 group. Then if you lose its mirror (the sixth drive), you lose the RAID-6 group and RAID-0 at the highest level goes down.
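The five-drive fault tolerance can be checked by brute force on the smallest sixteen-drive layout. This is a sketch under my own numbering assumptions: drives 0 to 15, consecutive drives form RAID-1 pairs, and each run of four pairs forms one RAID-6 group.

```python
from itertools import combinations

PAIRS_PER_GROUP = 4  # RAID-1 pairs in each RAID-6 group
GROUPS = 2           # RAID-6 groups striped together by RAID-0
TOTAL_DRIVES = 2 * PAIRS_PER_GROUP * GROUPS  # 16


def raid160_survives(failed):
    """True if every RAID-6 group has lost at most two complete RAID-1 pairs."""
    for group in range(GROUPS):
        lost_pairs = 0
        for pair in range(PAIRS_PER_GROUP):
            first = 2 * (group * PAIRS_PER_GROUP + pair)
            # A RAID-1 pair is only lost when both of its drives have failed.
            if first in failed and first + 1 in failed:
                lost_pairs += 1
        if lost_pairs > 2:
            return False  # RAID-6 group gone, so the RAID-0 on top is gone too
    return True


def min_fatal_failures():
    """Smallest number of failed drives for which some combination loses data."""
    for k in range(1, TOTAL_DRIVES + 1):
        if any(not raid160_survives(set(c))
               for c in combinations(range(TOTAL_DRIVES), k)):
            return k


print(min_fatal_failures())  # 6, so any five failures are survivable
```

The worst case is exactly the sequence described above: six failures that wipe out three complete pairs in the same RAID-6 group.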

RAID-666

RAID-666 requires four drives per RAID-6 group at the lowest level, followed by four of those RAID-6 groups per RAID-6 group in the intermediate layer, and four intermediate groups combined at the highest level by RAID-6. So the result is that at a minimum 64 drives are required for a RAID-666 configuration (4 × 4 × 4).

RAID-111

RAID-111 uses three levels of drive mirroring. The minimum configuration requires eight drives (2 × 2 × 2), only one of which is used for storing real data (the other 7 drives are used for mirroring). That’s a storage efficiency of only 12.5%!! However, you can lose up to seven drives without losing access to your data.
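The minimum drive counts for these triple-nested levels are just the per-level minimums multiplied together, and the storage efficiency works the same way. Here is a rough sketch; the table and function name are my own, and it assumes two-way mirrors for RAID-1 and the four-member minimum for RAID-6 used above.

```python
from fractions import Fraction
from math import prod

# Per level at its minimum size: (members required, members' worth of usable data).
# Assumptions: RAID-0 of two members, two-way RAID-1, four-member RAID-6.
MIN_LAYOUT = {"0": (2, 2), "1": (2, 1), "6": (4, 2)}


def nested_minimum(levels):
    """Minimum drive count and storage efficiency for a nested level such as '160'."""
    drives = prod(MIN_LAYOUT[level][0] for level in levels)
    efficiency = prod(Fraction(MIN_LAYOUT[level][1], MIN_LAYOUT[level][0])
                      for level in levels)
    return drives, efficiency


print(nested_minimum("666"))  # (64, Fraction(1, 8))
print(nested_minimum("111"))  # (8, Fraction(1, 8))
print(nested_minimum("160"))  # (16, Fraction(1, 4))
```

Under these assumptions a minimal RAID-666 ends up with the same 12.5% efficiency as RAID-111, just with eight times as many drives.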